Call for Abstracts

Scientific Program

The 6th Global Summit on Artificial Intelligence and Neural Networks will be organized around the theme “Harnessing the power of Artificial Intelligence”.

Neural Networks 2018 comprises 17 tracks and 74 sessions designed to address current issues in the field of neural networks.

Submit your abstract to any of the mentioned tracks. All related abstracts are accepted.

Register now for the conference by choosing the package that suits you best.

- Track 1-1 Artificial Narrow Intelligence

- Track 1-2 Artificial General Intelligence

- Track 1-3 Artificial Super Intelligence

- Track 1-4 Natural Language Processing

- Track 1-5 Fuzzy Logic System

- Track 1-6 Artificial Intelligence and Computer Vision

Cognitive computing refers to the hardware and/or software that mimics the functioning of the human brain and helps to improve human decision-making. It refers to systems that can learn at scale, reason with purpose and interact with humans naturally. It comprises software libraries and machine learning algorithms for extracting information and knowledge from unstructured data sources. The main aim is to build accurate models of how the human brain/mind senses, reasons and responds to stimuli. The underlying high-performance computing infrastructure is powered by processors such as multicore CPUs, GPUs, TPUs and neuromorphic chips. Cognitive systems interact easily with users and with mobile and cloud computing services, so that users can define their needs comfortably.

Neural Informatics for Cognitive Computing

Neural informatics is a multidisciplinary enquiry into the physiological and biological representation of knowledge and information in the brain at the neuron level.

- Track 2-1 AI And Signal Processing

- Track 2-2 Natural Language Interaction

- Track 2-3 Cognitive Assistant for Visually Impaired

- Track 2-4 Speech Recognition & Face Detection

- Track 2-5 Big Data and Cognitive Computing

- Track 2-5Big Data and Cognitive Computing

Pattern recognition is a branch of machine learning that focuses on recognizing patterns and regularities in data. In supervised pattern recognition, learning algorithms build classifiers from training data labelled with different object classes; optical character recognition (OCR), face detection, face recognition, object detection and object classification all use this approach. Unsupervised learning, by contrast, finds hidden structure in unlabelled data, for example through clustering techniques.
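A minimal sketch in plain Python (standard library only) can make the supervised/unsupervised contrast concrete. The supervised half is a nearest-centroid classifier, one of the simplest ways to build a classifier from labelled training data; the unsupervised half is a bare-bones k-means clustering that never sees the labels. The function names, the naive initialisation and the toy points are illustrative assumptions, not a prescribed method.

```python
import math
from collections import defaultdict

def mean(points):
    """Component-wise mean of a list of equal-length tuples."""
    return tuple(sum(c) / len(points) for c in zip(*points))

def fit_nearest_centroid(samples, labels):
    """Supervised: summarise each labelled class by the centroid of its training points."""
    groups = defaultdict(list)
    for x, y in zip(samples, labels):
        groups[y].append(x)
    return {label: mean(pts) for label, pts in groups.items()}

def predict(centroids, x):
    """Assign x to the class whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(x, centroids[label]))

def kmeans(points, k, iterations=20):
    """Unsupervised: find k cluster centres without using any labels."""
    centres = list(points[:k])  # naive initialisation: the first k points
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centres[i]))
            clusters[nearest].append(p)
        # Recompute each centre; keep the old one if its cluster is empty
        centres = [mean(c) if c else centres[i] for i, c in enumerate(clusters)]
    return centres, clusters

# Toy usage (hypothetical data): two well-separated groups of 2-D points
train = [(1.0, 1.2), (0.8, 1.0), (5.0, 5.1), (5.2, 4.9)]
labels = ["A", "A", "B", "B"]
centroids = fit_nearest_centroid(train, labels)
print(predict(centroids, (0.9, 1.1)))  # → A
centres, clusters = kmeans(train, k=2)
```

Real k-means implementations usually pick initial centres more carefully (for example at random or with k-means++), because the result depends on where the centres start.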

Feature selection, also called variable selection, is the process of selecting a subset of relevant features for use in model construction. It simplifies models so that they are easier to interpret, shortens training times and improves generalisation by reducing overfitting (through a reduction in the number of variables). The premise is that the data contains features that are either redundant or irrelevant and can therefore be removed without incurring much loss of information.
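One common family of techniques is the filter method, which scores features independently of any particular model. The sketch below, in plain Python, assumes numeric features and a numeric target: it drops constant columns, ranks the rest by absolute Pearson correlation with the target, and skips columns that nearly duplicate one already kept. The thresholds (`min_variance`, `max_redundancy`) and the function names are hypothetical choices; wrapper and embedded methods are other common options.

```python
import math

def variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)

def pearson(xs, ys):
    """Pearson correlation; defined here as 0.0 when either input is constant."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)

def select_features(rows, target, k, min_variance=1e-12, max_redundancy=0.95):
    """Filter-style feature selection; returns the indices of the kept columns.

    1. Drop (near-)constant columns: they carry no information.
    2. Rank the rest by |correlation with the target| (relevance).
    3. Greedily keep the top-ranked columns, skipping any that are almost
       perfectly correlated with a column already kept (redundancy).
    """
    columns = list(zip(*rows))
    candidates = [j for j, col in enumerate(columns) if variance(col) > min_variance]
    ranked = sorted(candidates, key=lambda j: abs(pearson(columns[j], target)), reverse=True)
    selected = []
    for j in ranked:
        if len(selected) == k:
            break
        if all(abs(pearson(columns[j], columns[s])) < max_redundancy for s in selected):
            selected.append(j)
    return sorted(selected)
```

Here `rows` holds one tuple of feature values per sample. Correlation only captures linear relationships, so a feature with a strong non-linear effect on the target could be dropped by this particular filter.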

- Track 3-1 Face Recognition Using Artificial Neural Network

- Track 3-2 Non-Parametric Neural Network

- Track 3-3 Self-Organizing Neural Network For Non-Parametric Regression Analysis

- Track 3-4 Recurrent Neural Networks And Reservoir Computing

Artificial neural networks use the backpropagation method to calculate the error contribution of each neuron after a batch of data (for example, a batch of images in image recognition) is processed. Backpropagation is a special case of an older and more general technique called automatic differentiation (specifically, its reverse mode). It is commonly used by the gradient descent optimization algorithm, which adjusts the weights of neurons using the gradient of the loss function. The technique is also called backward propagation of errors, because the error is calculated at the output and distributed back through the network layers.
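The following is a minimal sketch, in plain Python, of backpropagation for a network with one hidden layer of sigmoid units, a single sigmoid output and a mean-squared-error loss; these architectural choices, the function names and the XOR toy data are assumptions for illustration. `backprop` computes the error signal at the output first and then propagates it back to the hidden layer, and `train` feeds the resulting gradients to plain gradient descent.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_params(n_in, n_hidden, seed=0):
    """Small random weights for an n_in -> n_hidden -> 1 network (zero biases)."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    return W1, [0.0] * n_hidden, W2, 0.0

def forward(params, x):
    """Return the hidden activations and the network output for input x."""
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y

def loss(params, data):
    """Mean of 0.5 * (output - target)^2 over the data set."""
    return sum(0.5 * (forward(params, x)[1] - t) ** 2 for x, t in data) / len(data)

def backprop(params, data):
    """Gradients of `loss` with respect to every weight and bias."""
    W1, b1, W2, b2 = params
    n = len(data)
    gW1 = [[0.0] * len(row) for row in W1]
    gb1 = [0.0] * len(b1)
    gW2 = [0.0] * len(W2)
    gb2 = 0.0
    for x, t in data:
        h, y = forward(params, x)
        # Error signal at the output unit: dL/dz_out = (y - t) * sigmoid'(z_out) / n
        delta_out = (y - t) * y * (1.0 - y) / n
        gb2 += delta_out
        for j, hj in enumerate(h):
            gW2[j] += delta_out * hj
            # Propagate the error backwards through weight W2[j] to hidden unit j
            delta_h = delta_out * W2[j] * hj * (1.0 - hj)
            gb1[j] += delta_h
            for i, xi in enumerate(x):
                gW1[j][i] += delta_h * xi
    return gW1, gb1, gW2, gb2

def train(params, data, lr=0.5, epochs=2000):
    """Plain (full-batch) gradient descent using the backpropagated gradients."""
    W1, b1, W2, b2 = params
    for _ in range(epochs):
        gW1, gb1, gW2, gb2 = backprop((W1, b1, W2, b2), data)
        W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
        b1 = [b - lr * g for b, g in zip(b1, gb1)]
        W2 = [w - lr * g for w, g in zip(W2, gW2)]
        b2 -= lr * gb2
    return W1, b1, W2, b2

# Toy usage on XOR: compare the loss before and after training
xor = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
start = init_params(2, 3)
trained = train(start, xor)
print(loss(start, xor), loss(trained, xor))
```

A finite-difference check (perturbing one weight by a small ε and comparing the change in `loss` with the matching entry returned by `backprop`) is a quick way to confirm that hand-derived gradients like these are correct.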

- Track 4-1 Back-propagation for nonlinear self-tuning adaptive control

- Track 4-2 Control chart pattern recognition using backpropagation

- Track 4-3 Backpropagation Neural Network Implementation for Medical Image Compression

- Track 4-4 Time series forecasting using backpropagation neural networks

- Track 4-5 Backpropagation Neural Network in Tidal-Level Forecasting

Machine learning is the field of computer science that teaches machines to detect patterns and to adapt to new circumstances. Machine learning can be either experience-based or explanation-based. In robotics, machine learning plays a vital role: it helps the machine make optimized decisions, which increases its efficiency and leads to a more organized way of performing a particular task. It is employed in a range of computing tasks where designing and programming explicit algorithms with good performance is difficult or infeasible, for example email filtering, detection of network intruders or malicious insiders working towards a data breach, optical character recognition (OCR) and computer vision.

Data mining is the process of extracting useful patterns and information from large volumes of data. It is also called the knowledge discovery process, knowledge mining from data, knowledge extraction or data/pattern analysis. It is a logical process used to search through large amounts of data in order to find useful information. The goal of this technique is to find patterns that were previously unknown.
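Email filtering, the first application listed above, can be sketched with a multinomial naive Bayes classifier, a classic technique swapped in here purely as an illustration (the paragraph does not name a specific algorithm). The code assumes whitespace tokenisation and add-one (Laplace) smoothing; the function names and toy messages are hypothetical.

```python
import math
from collections import Counter

def train_naive_bayes(documents, labels):
    """Count word frequencies per class (multinomial naive Bayes)."""
    word_counts = {label: Counter() for label in set(labels)}
    for doc, label in zip(documents, labels):
        word_counts[label].update(doc.lower().split())
    vocabulary = set().union(*word_counts.values())
    return word_counts, Counter(labels), vocabulary

def classify(model, doc):
    """Return the class with the highest log-posterior score for `doc`."""
    word_counts, class_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        total_words = sum(counts.values())
        # Log prior plus log likelihood of each word, with add-one smoothing
        score = math.log(class_counts[label] / total_docs)
        for word in doc.lower().split():
            score += math.log((counts[word] + 1) / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)

# Toy usage with hypothetical messages
model = train_naive_bayes(
    ["win money now", "cheap money offer", "meeting at noon", "project meeting notes"],
    ["spam", "spam", "ham", "ham"],
)
print(classify(model, "money offer now"))  # → spam
```

The model is never given hand-written rules such as "money means spam"; it learns word statistics from labelled examples, which is what makes machine learning attractive for tasks like this.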
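A classic data-mining algorithm for finding previously unknown patterns is Apriori, which discovers itemsets (for example, products bought together) that occur in at least a minimum number of transactions. The following plain-Python sketch follows its level-wise join-and-prune structure but counts support naively; the function name, the shopping-basket data and the `min_support` threshold are assumptions for illustration.

```python
from itertools import combinations

def frequent_itemsets(transactions, min_support):
    """Apriori-style level-wise search.

    Returns {frozenset(itemset): support_count} for every itemset contained in
    at least `min_support` transactions.
    """
    transactions = [frozenset(t) for t in transactions]
    candidates = {frozenset([item]) for t in transactions for item in t}
    result = {}
    size = 1
    while candidates:
        # Count how many transactions contain each candidate itemset
        counts = {c: sum(1 for t in transactions if c <= t) for c in candidates}
        frequent = {c: n for c, n in counts.items() if n >= min_support}
        result.update(frequent)
        size += 1
        # Join step: merge frequent itemsets into candidates one item larger
        joined = {a | b for a in frequent for b in frequent if len(a | b) == size}
        # Prune step: a candidate can only be frequent if all its subsets are
        candidates = {c for c in joined
                      if all(frozenset(s) in frequent for s in combinations(c, size - 1))}
    return result

# Toy usage with hypothetical shopping baskets
baskets = [{"bread", "milk"}, {"bread", "butter"}, {"bread", "milk", "butter"}, {"milk"}]
frequent = frequent_itemsets(baskets, min_support=2)
# {bread, milk} and {bread, butter} are frequent pairs (support 2); {milk, butter} is not
```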

- Track 5-1 Machine Learning And Data Mining

- Track 5-2 Clustering And Predictive Modeling

- Track 5-3 Parametric and Non-parametric Machine Learning Algorithms

Artificial neural networks are computer simulations that perform specific tasks such as pattern recognition and clustering.

Artificial neural networks are mathematical models inspired by the organization and functioning of biological neurons. Like the human brain, they acquire knowledge through learning, and this knowledge is stored in the strengths of the connections between neurons, known as synaptic weights.

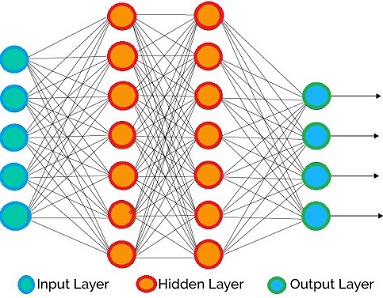

Architecture of ANN

A neural network contains a large number of artificial neurons, called units, arranged in a series of layers: an input layer, one or more hidden layers and an output layer.

- Input layer: contains the units that receive the data from the outside world from which the network learns.

- Output layer: contains the units that respond with what the network has learned about a task.

- Hidden layer: lies between the input and output layers and transforms the input into something the output units can use.
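To illustrate how data moves through these three kinds of layer, here is a forward pass for a tiny network with 3 inputs, 4 hidden units and 2 outputs, written in plain Python. The weights are hypothetical numbers chosen by hand (a trained network would learn them), the tanh hidden activation and softmax output are common choices rather than requirements, and the helper names are assumptions.

```python
import math

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each unit computes activation(w·x + b)."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def softmax(zs):
    m = max(zs)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical weights for a 3-input, 4-hidden-unit, 2-output network
W_hidden = [[0.2, -0.5, 0.1], [0.7, 0.3, -0.2], [-0.4, 0.6, 0.5], [0.1, 0.1, 0.1]]
b_hidden = [0.0, 0.1, -0.1, 0.0]
W_out = [[0.3, -0.6, 0.8, 0.2], [-0.5, 0.4, 0.1, 0.9]]
b_out = [0.05, -0.05]

def forward(x):
    # Input layer: x simply holds the raw features from the outside world
    hidden = dense(x, W_hidden, b_hidden, math.tanh)   # hidden layer transforms them
    scores = dense(hidden, W_out, b_out, lambda z: z)  # output layer: one score per class
    return softmax(scores)                             # scores turned into probabilities
```

For example, `forward([1.0, 0.5, -1.0])` returns two numbers that sum to 1, which can be read as the network's confidence in each of two classes.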

- Track 6-1 Advances in Artificial Neural Systems

- Track 6-2 ANN Controller for automatic ship berthing

- Track 6-3 ANN in Estimating Participation in Electrons

- Track 6-4 Adaptive Neuro Fuzzy Inference System

- Track 6-5 Parallel growing and training of neural networks using output parallelism

Deep learning, also known as deep structured learning, is part of the broader family of machine learning methods based on learning data representations. Deep networks are typically trained with some form of gradient descent, with the gradients computed by backpropagation.

The layers used in deep learning include hidden layers of artificial neural networks and sets of propositional formulas.

Deep Neural Network

A deep neural network (DNN) is an ANN with multiple hidden layers between the input and output layers. DNN architectures generate compositional models in which an object is expressed as a layered composition of primitives. DNNs are typically feedforward networks, in which data flows from the input layer to the output layer without looping back.
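Because a DNN is a layered composition, it can be built quite literally as a composition of functions. The sketch below assumes ReLU hidden layers, a linear output layer and random He-style initialisation (standard deviation sqrt(2 / inputs)); the layer sizes and names are hypothetical, and the network is untrained.

```python
import random
from functools import reduce

def relu(z):
    return max(0.0, z)

def make_layer(n_in, n_out, activation, rng):
    """Return a function mapping an n_in-vector to an n_out-vector (random weights)."""
    weights = [[rng.gauss(0.0, (2.0 / n_in) ** 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out

    def layer(x):
        return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(weights, biases)]
    return layer

def make_dnn(sizes, seed=0):
    """Build a feedforward DNN from layer sizes: ReLU hidden layers, linear output."""
    rng = random.Random(seed)
    layers = [make_layer(a, b, relu, rng) for a, b in zip(sizes[:-2], sizes[1:-1])]
    layers.append(make_layer(sizes[-2], sizes[-1], lambda z: z, rng))
    # The network is the composition of its layers: data flows strictly forward
    return lambda x: reduce(lambda h, layer: layer(h), layers, x)

net = make_dnn([4, 8, 8, 8, 2])
print(net([1.0, 0.0, -1.0, 0.5]))
```

For example, `make_dnn([4, 8, 8, 8, 2])` has three hidden layers of 8 units; adding entries to the list deepens the network without changing any other code.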

Applications

- Automatic speech recognition

- Image recognition

- Visual art processing

- Natural language processing

- Track 7-1 Deep Feedforward Networks

- Track 7-2 Autoencoders

- Track 7-3 Optimization for training deep neural network models

- Track 7-4 Sequence Modeling: Recurrent and Recursive Nets

- Track 8-1 Data Fusion between Physical and Digital Worlds

- Track 8-2 Occupancy Modeling for Smart Buildings

- Track 8-3 Context-driven processing and inference

- Track 8-4 Interactive machine learning

- Track 8-5 Smart homes and media convergence

The perceptron is a machine learning algorithm for the supervised learning of binary classifiers. It can be viewed as a single-layer artificial neural network with only one neuron, and as a classification algorithm that makes its predictions using a linear predictor function combining a set of weights with the feature vector.
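The classic perceptron learning rule nudges the weights toward each misclassified example. A minimal plain-Python sketch, assuming 0/1 targets, a step activation and a hypothetical learning rate of 1.0, trained here on the logical AND function:

```python
def predict(weights, bias, x):
    """Step function applied to the linear predictor w·x + b."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def train_perceptron(samples, targets, lr=1.0, max_epochs=100):
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, t in zip(samples, targets):
            update = lr * (t - predict(weights, bias, x))  # zero when the prediction is right
            if update:
                weights = [w + update * xi for w, xi in zip(weights, x)]
                bias += update
                errors += 1
        if errors == 0:  # converged: every training sample classified correctly
            break
    return weights, bias

# Toy usage: learn logical AND
and_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_targets = [0, 0, 0, 1]
w, b = train_perceptron(and_inputs, and_targets)
print([predict(w, b, x) for x in and_inputs])  # → [0, 0, 0, 1]
```

The rule is guaranteed to converge only when the classes are linearly separable; on XOR, for instance, it would run until `max_epochs` without classifying every example correctly.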

Multilayer Perceptron

A multilayer perceptron (MLP) is a class of feedforward artificial neural networks. These layered feedforward networks are trained using the static backpropagation training algorithm. Designing and training an MLP involves several issues:

- Selecting the number of hidden layers to use in the network.

- Searching globally for a solution that avoids local minima.

- Validating the network to test for overfitting.

- Converging to an optimal solution in a reasonable period of time.
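For the overfitting check in the list above, a common practice is early stopping: monitor the loss on held-out validation data after each epoch and stop once it no longer improves. The sketch below is framework-agnostic; `train_one_epoch` and `validation_loss` are hypothetical caller-supplied callables, and `patience` is an assumed tuning knob.

```python
def train_with_early_stopping(train_one_epoch, validation_loss, max_epochs=100, patience=5):
    """Train while the validation loss improves; stop after `patience`
    consecutive epochs without improvement. Returns (best_epoch, best_loss)."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        loss = validation_loss()
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
            # A real implementation would also save a copy of the weights here
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss has stopped improving: likely overfitting
    return best_epoch, best_loss
```

Training loss usually keeps falling; a validation loss that turns upward while it does so is the classic sign that the network has started to memorise the training set.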

- Track 9-1 Multilayer Perceptrons and Kernel Networks

- Track 9-2 Multilayer Perceptron Neural Network for flow prediction

- Track 9-3 Probability matching in Perceptrons

- Track 9-4 Backpropagation algorithm for the on-line training of Multilayer Perceptrons

Cloud computing is a branch of information technology that provides ubiquitous access to shared pools of virtualised computer resources. A cloud can host different workloads; it allows workloads to be scaled out on demand by rapidly provisioning physical or virtual machines; it supports redundant, self-recovering, highly scalable programming models; and it allows workloads to recover from hardware and software failures and to rebalance resource allocations.

Artificial intelligence technology plays an important role in cloud computing applications, especially in making resources available and in providing distribution transparency, openness and scalability. By working together, artificial intelligence and cloud computing are expected to have an important impact on the development of information technology.
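Scaling out on demand is usually driven by a simple feedback rule. The sketch below is modelled on the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current utilisation / target utilisation); the function name, the percentage units and the replica bounds are assumptions, and production autoscalers add tolerances and stabilisation windows that are omitted here.

```python
import math

def desired_replicas(current_replicas, current_utilisation_pct, target_utilisation_pct,
                     min_replicas=1, max_replicas=20):
    """Proportional scale-out/scale-in rule: resize the pool so that average
    utilisation moves toward the target, clamped to [min_replicas, max_replicas]."""
    if current_replicas == 0:
        return min_replicas
    wanted = math.ceil(current_replicas * current_utilisation_pct / target_utilisation_pct)
    return max(min_replicas, min(max_replicas, wanted))
```

For example, with 4 machines at 90 % utilisation and a 60 % target, the rule asks for 6 machines; at 30 % utilisation it would scale in to 2.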

- Track 10-1 Secure data management within and across data centers

- Track 10-2 Cloud Cryptography

- Track 10-3 Cloud access control and key management

- Track 10-4 Integrity assurance for data outsourcing

- Track 10-5 Software and data segregation security

Autonomous robots are intelligent machines that can perform tasks under the control of a computer program. They are independent of any human controller and can act on their own. The basic idea is to program the robot to respond in a certain way to outside stimuli. The combined study of neuroscience, robotics and artificial intelligence is called neurorobotics.
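The idea of programming a robot to respond in a certain way to outside stimuli can be sketched as a purely reactive controller that maps sensor readings directly to actions. Everything here is hypothetical: three range sensors, a 0.5 m safety distance and string-valued motor commands stand in for a real robot's interface.

```python
from dataclasses import dataclass

@dataclass
class Reading:
    """Hypothetical distance readings (metres) from three range sensors."""
    left: float
    front: float
    right: float

def react(reading, safe_distance=0.5):
    """Map a sensor stimulus directly to a motor command, with no human in the loop."""
    if reading.front < safe_distance:
        # Obstacle ahead: turn toward the side with more free space
        return "turn_left" if reading.left > reading.right else "turn_right"
    if reading.left < safe_distance:
        return "veer_right"
    if reading.right < safe_distance:
        return "veer_left"
    return "forward"

print(react(Reading(left=2.0, front=0.3, right=1.0)))  # → turn_left
```

Calling `react` in a loop with fresh sensor readings gives simple autonomous behaviour; more capable robots layer planning and learning on top of reflexes like these.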

Applications and their classification

Autonomous robots are classified into four types:

- Programmable: swarm robotics, mobile robots, industrial control and spacecraft.

- Non-programmable: path guiders and medical product carriers.

- Intelligent: robots in medical and military applications, and home appliance control systems.

- Adaptive: robotic grippers, spraying and welding systems.

- Track 11-1 Hybridization of Swarm Intelligence techniques, with applications to robotics or autonomous complex systems

- Track 11-2 Soft Computing for Robotics

- Track 11-3 Formation control for Autonomous Robots

- Track 11-4 Advances in Autonomous Mini Robots

Support vector machines (SVMs) are a set of related supervised machine learning algorithms for classification and regression that are often highly accurate. In this algorithm, each data item is plotted as a point in n-dimensional space (where n is the number of features) with the value of each feature being the value of a particular coordinate. An SVM uses an optimal linear separating hyperplane to separate two classes in feature space. This optimal hyperplane is obtained by maximizing the minimum margin between the two sets, so the resulting hyperplane depends only on the border training patterns, called support vectors.
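A linear SVM can be sketched by minimising the regularised hinge loss with plain subgradient descent. This is a swapped-in, deliberately simple training method: practical SVM solvers usually work on the dual quadratic program (for example with SMO) and support kernels, neither of which is shown. Labels are assumed to be +1/-1, and `lam`, `lr` and `epochs` are hypothetical settings.

```python
def train_linear_svm(samples, labels, lam=0.01, lr=0.1, epochs=200):
    """Soft-margin linear SVM trained by full-batch subgradient descent on
    lam/2 * ||w||^2 + mean(max(0, 1 - y * (w·x + b))). Labels must be +1 or -1."""
    n, dim = len(samples), len(samples[0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]
        grad_b = 0.0
        for x, y in zip(samples, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:  # inside the margin or misclassified: hinge loss is active
                grad_w = [g - y * xi / n for g, xi in zip(grad_w, x)]
                grad_b -= y / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def decision(w, b, x):
    """Signed score w·x + b; its sign is the predicted class."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def support_vectors(samples, labels, w, b, tol=1e-3):
    """Training points on or inside the margin: y * f(x) <= 1 (plus a tolerance)."""
    return [x for x, y in zip(samples, labels) if y * decision(w, b, x) <= 1 + tol]

# Toy usage: two linearly separable groups of 2-D points
X = [(2.0, 2.0), (3.0, 3.0), (2.0, 3.0), (-2.0, -2.0), (-3.0, -3.0), (-2.0, -3.0)]
Y = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(X, Y)
print([1 if decision(w, b, x) > 0 else -1 for x in X])  # → [1, 1, 1, -1, -1, -1]
```

Only points with y·f(x) < 1 contribute to the hinge-loss gradient, which mirrors the statement above that the final hyperplane depends only on the border points; `support_vectors` returns those points approximately, since subgradient descent only approaches the exact optimum.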

- Track 12-1 Support Vector Machines for Transduction

- Track 12-2 Support Vector Machines for Predictive Modeling in Heterogeneous Catalysis

- Track 12-3 Fuzzy Support Vector Machines

- Track 12-4 Kernel Methods and Support Vector Machines

Parallel processing reduces processing time by breaking up program tasks and running them simultaneously on multiple microprocessors. With more engines (CPUs) running, the program runs faster. It is particularly useful for programs that perform complex computations, and it provides a viable option in the quest for cheaper computing alternatives. Supercomputers commonly have hundreds of thousands of microprocessors for this purpose. Parallel computing is an evolution of serial computing in which a job is broken into discrete parts that can be executed concurrently. Each part is further broken down into a series of instructions, and the instructions from each part execute simultaneously on different CPUs.
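The split-run-combine pattern described above can be sketched with Python's standard `concurrent.futures` module: the job (a large sum of squares, chosen only as a stand-in for a complex computation) is broken into contiguous chunks, each chunk runs in a separate worker process, and the partial results are added together. The chunking helper and the function names are assumptions.

```python
from concurrent.futures import ProcessPoolExecutor

def sum_of_squares(bounds):
    """Work for one part of the job: sum i*i over the half-open range [lo, hi)."""
    lo, hi = bounds
    return sum(i * i for i in range(lo, hi))

def split(n, parts):
    """Break range(n) into at most `parts` contiguous, nearly equal chunks."""
    if n <= 0:
        return []
    step = -(-n // parts)  # ceiling division
    return [(lo, min(lo + step, n)) for lo in range(0, n, step)]

def parallel_sum_of_squares(n, workers=4, executor_cls=ProcessPoolExecutor):
    """Run each chunk concurrently in a worker pool, then combine the partial sums."""
    with executor_cls(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, split(n, workers)))
```

On platforms that start workers by spawning a fresh interpreter, call `parallel_sum_of_squares` from inside an `if __name__ == "__main__":` block. Processes are used instead of threads because, in the standard CPython build, the global interpreter lock prevents threads from executing Python bytecode on several CPUs at once.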

- Track 13-1 Supercomputing / High Performance Computing (HPC)

- Track 13-2 General purpose computing on graphics processing units

- Track 13-3 Adaptive Sequential Posterior Simulators

- Track 13-4 Re-optimizing Data-Parallel Computing

- AI algorithms used for keeping records.

- Helping interpret large amounts of data using computer technology.

- Choosing a particular method for analysing data.

- Track 14-1 Computational Evolutionary Biology

- Track 14-2 DNA Sequencing with Artificial Intelligence

- Track 14-3 Genetics and Genomics

- Track 14-4 Intelligent Systems in Bioinformatics

- Track 14-5 Prediction of Protein Analysis

Ubiquitous computing is a concept in computer science and software engineering in which computing is made to appear anytime and everywhere. It can occur using any device, in any location and in any format.

Key features include:

- Use of inexpensive processors, thereby reducing memory and storage requirements.

- Totally connected and constantly available computing devices, and the capture of real-time attributes.

- Focus on many-to-many relationships in the environment, instead of one-to-one, many-to-one or one-to-many, along with the idea of technology that is constantly present.

- Reliance on the converging Internet, wireless technology and advanced electronics.

- Track 15-1 System support infrastructures and services

- Track 15-2 Middleware services and agent technologies

- Track 15-3 User interfaces and interaction models

- Track 15-4 Wireless/mobile service management and delivery

- Track 15-5 Wearable computers and technologies

- Track 15-6 Interoperability and wide scale deployment

- Track 16-1 Advanced NLG Systems

- Track 16-2 Natural Language Understanding and Interpretation

- Track 16-3 Approaches of NLP for Linguistic Analysis

- Track 16-4 NLP for Understanding Semantic Analysis

- Track 16-5 NLP for Advanced Text Analysis